As of August 2nd, 2025, the EU AI Act’s obligations for General Purpose AI (GPAI) models officially apply. With the release of the GPAI Guidelines, the European Commission has delivered long-awaited clarity on how these new rules work in practice. The guidelines help developers, deployers, and other stakeholders across the AI value chain understand their responsibilities and innovate confidently within the EU regulatory framework.

On this page, you'll find:

- A concise summary of the most important rules, definitions, and obligations introduced by the AI Act.

- Our practical tips and implementation guidelines to help you embed these requirements into your internal AI governance processes effectively and sustainably.

Whether you're building, integrating, or auditing AI systems, our goal is to help you move from regulation to action - faster and with greater confidence.

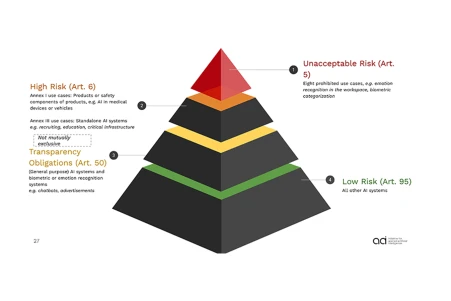

The EU AI Act is the world’s first comprehensive regulation for AI systems. It categorizes them by risk, from minimal to unacceptable, and defines clear requirements for each category. Businesses using or developing AI, especially in sensitive areas like healthcare, HR, or public services, must meet strict safety and compliance standards. But applying the Act in practice can be complex. Companies are asking:

-

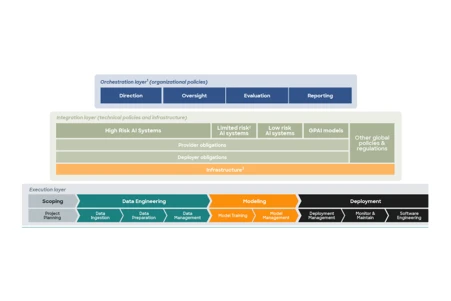

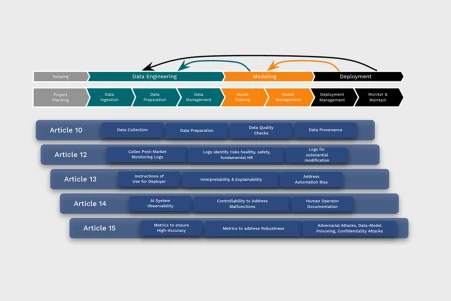

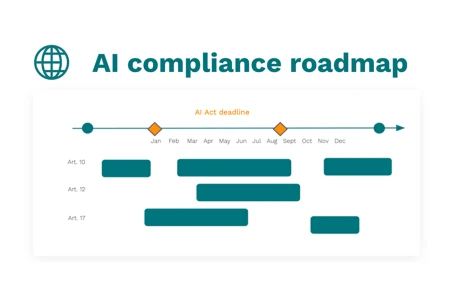

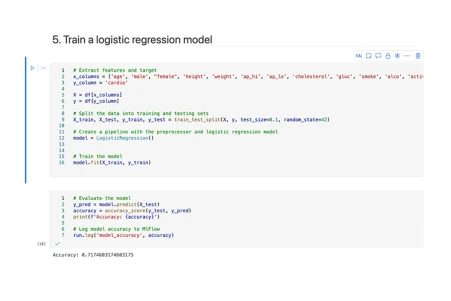

How do we apply Articles 9–15 at both use case and company level?

-

How do we govern and deploy generative AI compliantly?

-

Which process changes and roles are essential to meet legal and technical standards?

-

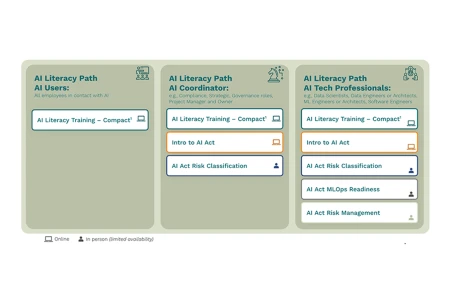

How do we upskill employees for the Act’s AI literacy expectations?

-

How can AI risk management be integrated into daily operations?

At appliedAI, we help companies answer these questions in a practical, efficient way tailored to their needs.

The EU AI Act isn’t just about compliance. Done right, it’s a chance to:

- Improve internal AI processes and risk awareness.

- Build trust with customers, regulators, and partners.

- Identify and scale high-value AI systems confidently.

- Strengthen ethical use of technology across your organization.

- Lay the foundation for global AI readiness.

Especially for SMEs, the AI Act offers a structured path to responsible AI development. This reduces uncertainty and gives your company a clear basis for long-term strategy.

We’ve worked alongside leading organizations to:

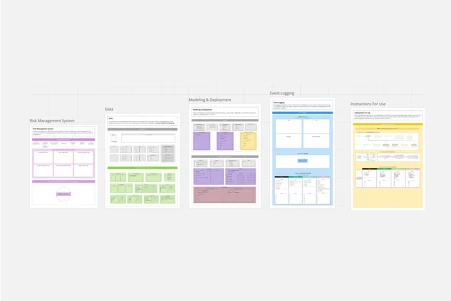

- Classify and manage risk across diverse AI use cases.

- Establish clear AI governance and accountability structures.

- Embed AI risk management at scale.

- Prepare for audits and evolving standards like ISO/IEC 42001 and ISO/IEC 38507.

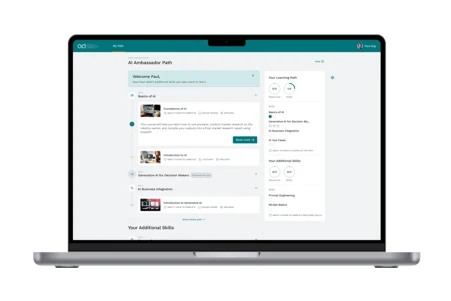

- Build internal capability through practical training and long-term support.

We bring deep experience and a European perspective, helping you align with the EU AI Act, meet stakeholder expectations, and drive responsible innovation.

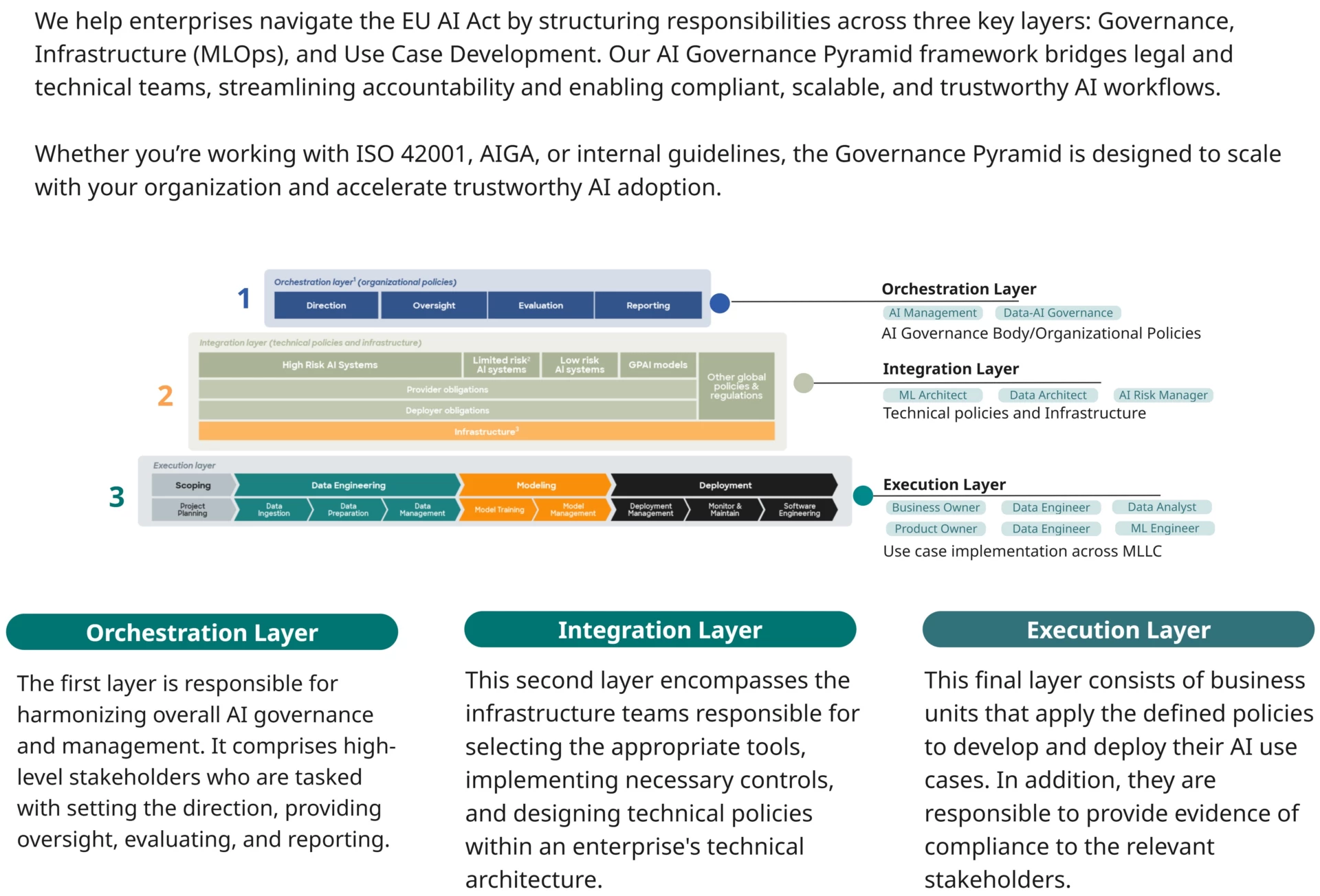

Building Scalable & Cross-Functional AI Act Governance