AI is a disruptive technology that affects all companies, regardless of their industry or size. Entire business sectors are already being extensively transformed by AI. Many companies have begun investing in data and AI initiatives, but few are realizing significant business value. Often, AI initiatives are not aligned with the company's overall strategy and it remains unclear how applying AI will add business value. There is a widespread lack of knowledge about what foundations are required for implementation and the order in which challenges should be addressed.

To create value through the application of AI, your company should systematically reach a higher level of AI maturity. This will enable your company to remain competitive and to actively achieve competitive advantages through the application of AI. appliedAI offers you support in the AI transformation of your company: from the analysis of the competition to the definition of a suitable target for the application of AI. In doing so, we consider not only technical aspects but also organizational and personnel aspects and, thus, develop a holistic AI strategy.

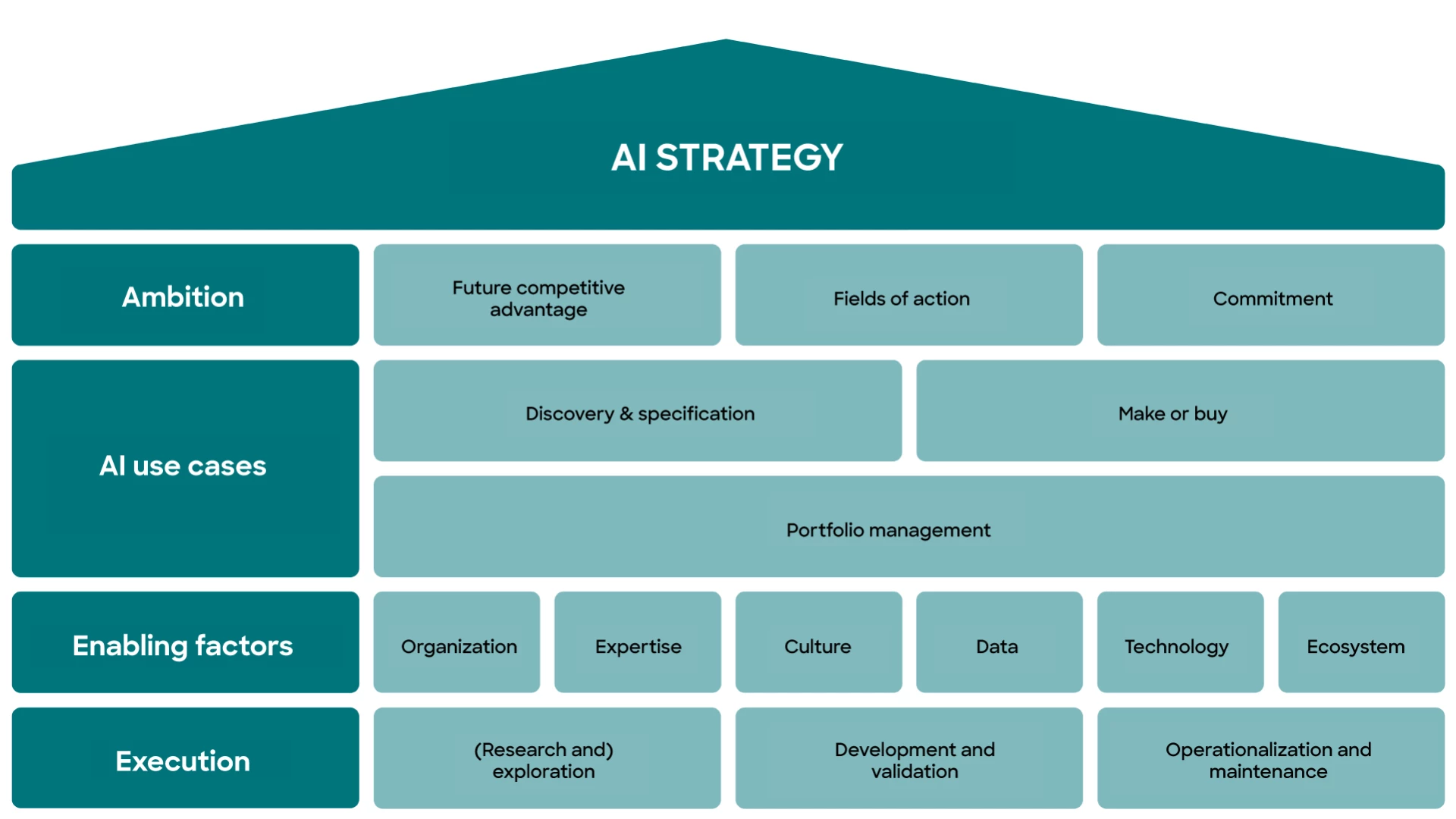

We draw on our proven approaches, which we have already used to support many companies, among them EnBW and MTU Aero Engines, in their AI transformation. For this purpose, we have developed the appliedAI AI strategy house, which considers all relevant dimensions necessary for a successful AI transformation: from the AI ambition and the use case portfolio to enabling factors such as organizational design, expertise, data and technology, and execution competence.

In order to achieve value creation through Artificial Intelligence, your company must embark on a journey towards higher AI maturity. This will enable your company to gain a competitive advantage through the use of AI.

appliedAI systematically supports you in the AI transformation of your company: from developing a concrete transformation plan (the AI journey) to deriving a target picture and supporting your organizational design. We rely on our proven approaches, which have helped companies such as EnBW and MTU in their AI transformation.

AI maturity assessment and identification of required actions

Our systematic support in your company's AI transformation includes an evaluation of the status quo as well as the derivation of an action plan for the implementation of the AI strategy.

- Assessment of the current AI maturity level of your company

- Systematic and targeted identification of improvement potentials along the dimensions of our proven and successfully applied AI Strategy House

- Definition of a one-year action plan based on the individual results of your AI maturity assessment

AI Agents have the potential to transform business processes by enhancing efficiency in research, analysis, and decision-making across various problem domains. But how can your organization successfully adopt and integrate AI Agents? Our AI Agent Kickstart Program offers a structured approach to identify opportunities, assess readiness, and develop a tailored implementation roadmap—ensuring AI Agents seamlessly align with your broader AI strategy.

Development of an AI ambition

We help you develop an AI ambition that is aligned with your company's overall strategy and serves as a target for managing your company-wide AI activities.

- Definition of an AI ambition that is understandable and tangible for all stakeholders and extends across all departments

- Alignment of the external market perspective with the internal perspective to derive the future potential for your company

- Embedding and alignment of the AI ambition with the overall strategy

Developing a Trustworthy AI Operating Model

Our Trustworthy AI Operating Model forms the backbone of a resilient and future-ready AI organization. We work with you to build a structure tailored to your AI maturity, ensuring scalable and compliant implementation:

- Establish a central AI competence center to systematically manage use cases

- Define clear governance structures, including roles and responsibilities

- Develop a practical framework for ownership, budget allocation, and resource planning

- Design training and upskilling initiatives to build AI capabilities across your organization


Ready to explore your company's AI maturity level? Start with a first look through our Quick Check - AI Maturity Compass. It’s free and quick to complete. After the test, you will receive a report with the results via email.